6 Detection of data fabrication using statistical tools

## Loading required package: viridisLiteAny field of empirical inquiry is faced with cases of scientific misconduct at some point, either in the form of fabrication, falsification, or plagiarism (FFP). Psychology faced Stapel; medical sciences faced Poldermans and Macchiarini; life sciences faced Voignet; physical sciences faced Schön — these are just a few examples of research misconduct cases in the last decade. Overall, an estimated 2% of all scholars admit to having falsified or fabricated research results at least once during their career (Fanelli 2009), which due to its self-report nature is likely to be an underestimate of the true rate of misconduct. The detection rate of data fabrication is likely to be even lower; for example, among several hundreds of thousands of researchers working in the United States and the Netherlands, only around a dozen cases become public each year. At best, this suggests a detection rate below 1% among those 2% who admit to fabricating or falsifying data — the tip of a seemingly much larger iceberg.

The ability to detect fabricated data may help deter researchers from fabricating data in their work. Deterrence theory (e.g., Hobbes 1651) states that improved detection of undesirable behaviors decreases the expected utility of said behaviors, ultimately leading to fewer people to engage in it. Detection techniques have developed differently for fabrication, falsification, and plagiarism. Plagiarism scanners have been around the longest (e.g., Parker and Hamblen 1989) and are widely implemented in practice, not only at journals but also in the evaluation of student theses (e.g., with commercial services such as Turnitin). Various tools have been developed to detect image manipulation and some of these tools have been implemented at biomedical journals to screen for fabricated or falsified images. For example, the Journal of Cell Biology and the EMBO journal scan each submitted image for potential image manipulation (The Journal of Cell Biology 2015b; Nature editors 2017), which supposedly increases the risk of detecting (blatant) image manipulation. Recently developed algorithms even allow automated scanning of images for such manipulations (Koppers, Wormer, and Ickstadt 2016). The application of such tools can also help researchers systematically evaluate research articles in order to estimate the extent to which image manipulation occurs in the literature (4% of all papers are estimated to contain manipulated images; Bik, Casadevall, and Fang 2016b) and to study factors that predict image manipulation (Fanelli et al. 2018).

Methods to detect fabrication of quantitative data are often based on a mix of psychology theory and statistics theory. Because humans are notoriously bad at understanding and estimating randomness (Haldane 1948; Tversky and Kahneman 1974; Tversky and Kahneman 1971; Nickerson 2000; Wagenaar 1972), they might create fabricated data that fail to follow the fundamentally probabilistic nature of genuine data. Data and outcomes of analyses based on these data that fail to align with the (at least partly probabilistic) processes that are assumed to underlie genuine data may indicate deviations from the reported data collecting protocol, potentially even data fabrication or falsification.

Statistical methods have proven to be of importance in initiating data fabrication investigations or in assessing the scope of potential data fabrication. For example, Kranke, Apfel, and Roewer skeptically perceived Fujii’s data (Kranke, Apfel, and Roewer 2000) and used statistical methods to contextualize their skepticism. At the time, a reviewer perceived them to be on a “crusade against Fujii and his colleagues” (Kranke 2012) and further investigation remained absent. Only when Carlisle extended the systematic investigation to 168 of Fujii’s papers for misconduct (Carlisle 2012; Carlisle and Loadsman 2016; Carlisle et al. 2015) did events cumulate into an investigation- and ultimately retraction of 183 of Fujii’s peer-reviewed papers (Oransky 2015; “Joint Editors-in-Chief request for determination regarding papers published by Dr. Yoshitaka Fujii” 2013). In another example, the Stapel case, statistical evaluation of his oeuvre occurred after he had already confessed to fabricating data, which ultimately resulted in 58 retractions of papers (co-)authored by Stapel (Levelt 2012; Oransky 2015).

In order to determine whether the application of statistical methods to detect data fabrication is responsible and valuable, we need to study their diagnostic value. Specifically, many of the developed statistical methods to detect data fabrication are quantifications of case specific suspicions by researchers, but these applications do not inform us on their diagnostic value (i.e., sensitivity and specificity) outside of those specific cases. Side-by-side comparisons of different statistical methods to detect data fabrication has also been difficult through the in-casu origin of these methods. Moreover, the efficacy of these methods based on known cases is likely to be biased, considering that an unknown amount of undetected cases is not included. Using different statistical methods to detect fabricated data using genuine versus fabricated data yields information on the sensitivity and specificity of the detection tools. This is important because of the severe professional- and personal consequences of accusations of potential research misconduct (as illustrated by the STAP case; Cyranoski 2015). These methods might have utility in misconduct investigations where the prior chances of misconduct are high, but their diagnostic value in large-scale applications to screen the literature are unclear.

In this article, we investigate the diagnostic performance of various statistical methods to detect data fabrication. These statistical methods (detailed next) have not previously been validated systematically in research using both genuine and fabricated data. We present two studies where we try to distinguish (arguably) genuine data from known fabricated data based on these statistical methods. These studies investigate methods to detect data fabrication in summary statistics (Study 1) or in individual level (raw) data (Study 2) in psychology. In Study 1, we invited researchers to fabricate summary statistics for a set of four anchoring studies, for which we also had genuine data from the Many Labs 1 initiative (https://osf.io/pqf9r; R. A. Klein et al. 2014). In Study 2, we invited researchers to fabricate individual level data for a classic Stroop experiment, for which we also had genuine data from the Many Labs 3 initiative (https://osf.io/n8xa7/; Ebersole et al. 2016). Before presenting these studies, we discuss the theoretical framework of the investigated statistical methods to detect data fabrication.

6.1 Theoretical framework

Statistical methods to detect potential data fabrication can be based either on reported summary statistics that can often be retrieved from articles or on the raw (underlying) data if these are available. Below we detail \(p\)-value analysis, variance analysis, and effect size analysis as potential ways to detect data fabrication using summary statistics. \(P\)-value analyses can be applied whenever a set of nonsignificant \(p\)-values are reported; variance analysis can be applied whenever a set of variances and accompanying sample sizes are reported for independent, randomly assigned groups; effect size analysis can be used whenever the effect size is reported or calculated (e.g., an APA reported t- or F-statistic; Hartgerink, Wicherts, and Van Assen 2017). Among the methods that can be applied to uncover potential fabrication using raw data, we consider digit analyses (i.e., the Newcomb-Benford law and terminal digit analysis) and multivariate associations between variables. The Newcomb-Benford law can be applied on ratio- or count scale measures that have sufficient digits and that are not truncated (Hill and Schürger 2005); terminal digit analysis can also be applied whenever measures have sufficient digits (see also Mosimann, Wiseman, and Edelman 1995). Multivariate associations can be investigated whenever there are two or more numerical variables available and data on that same relation is available from (arguably) genuine data sources.

6.1.1 Detecting data fabrication in summary statistics

6.1.1.1 \(P\)-value analysis

The distribution of a single or a set of independent \(p\)-values is uniform if the null hypothesis is true, while it is right-skewed if the alternative hypothesis is true (Fisher 1925). If the model assumptions of the underlying process hold, the probability density function of one \(p\)-value is the result of the population effect size, the precision of the estimate, and the observed effect size, whose properties carry over to a set of \(p\)-values if those \(p\)-values are independent.

When assumptions underlying the model used to compute a \(p\)-value are violated, \(p\)-value distributions can take on a variety of shapes. For example, when optional stopping (i.e., adding batches of participants until you have a statistically significant result) occurs and the null hypothesis is true, \(p\)-values just below .05 become more frequent (Lakens 2015a; Hartgerink et al. 2016). However, when optional stopping occurs under the alternative hypothesis or when other researcher degrees of freedom are used in an effort to obtain significance (Simmons, Nelson, and Simonsohn 2011; Wicherts et al. 2016), a right-skewed distribution for significant \(p\)-values can and will likely still occur (Ulrich and Miller 2015; Hartgerink et al. 2016).

A failure of independent \(p\)-values to be right-skewed or uniformly distributed (as would be theoretically expected) can indicate potential data fabrication. For example, in the Fujii case, baseline measurements of supposed randomly assigned groups later turned out to be fabricated. When participants are randomly assigned to conditions, measures at baseline are expected to statistically equivalent between the groups (i.e., equivalent distributions), hence, produce uniformly distributed \(p\)-values. However, in the Fujii case, Carlisle observed many large \(p\)-values, which ultimately led to the identification of potential data fabrication (Carlisle 2012). The cause of such large \(p\)-values may be that the effect of randomness is underappreciated when fabricating statistically nonsignificant data due to (for example) widespread misunderstanding of what a \(p\)-value means (Sijtsma, Veldkamp, and Wicherts 2015; Goodman 2008), which results in groups of data that are too similar conditional on the null hypothesis of no differences between the groups. As an illustration, we simulated normal distributed measurements for studies and their \(t\)-test comparisons in Table 6.1, under statistically equivalent populations (Set 1). We also fabricated independent data for equivalent groups, where we fixed the mean and standard deviation for all studies and subsequently added (too) little uniform noise to these parameters (Set 2). The expected value of a uniform \(p\)-value distribution is .5, but the fabricated data from our illustration have a mean \(p\)-value of 0.956.

| M (SD) | M (SD) | P-value | M (SD) | M (SD) | P-value | |

|---|---|---|---|---|---|---|

| Study 1 | 48.432 (10.044) | 49.158 (9.138) | 0.594 | 52.274 (10.475) | 63.872 (10.684) | 0.918 |

| Study 2 | 50.412 (10.322) | 49.925 (9.777) | 0.732 | 62.446 (10.454) | 60.899 (10.398) | 0.989 |

| Study 3 | 51.546 (9.602) | 51.336 (9.479) | 0.877 | 62.185 (10.239) | 55.655 (10.457) | 0.951 |

| Study 4 | 49.919 (10.503) | 50.857 (9.513) | 0.509 | 62.468 (10.06) | 68.469 (10.761) | 0.956 |

| Study 5 | 49.782 (11.167) | 50.308 (8.989) | 0.714 | 67.218 (10.328) | 55.846 (10.272) | 0.915 |

| Study 6 | 48.631 (9.289) | 49.29 (10.003) | 0.630 | 62.806 (11.216) | 66.746 (11.14) | 0.975 |

| Study 7 | 49.121 (9.191) | 47.756 (10.095) | 0.318 | 50.19 (10.789) | 55.724 (10.302) | 0.960 |

| Study 8 | 49.992 (9.849) | 51.651 (10.425) | 0.249 | 54.651 (11.372) | 55.336 (10.388) | 0.995 |

| Study 9 | 50.181 (9.236) | 51.292 (10.756) | 0.434 | 63.322 (11.247) | 53.734 (11.488) | 0.941 |

| Study 10 | 49.323 (10.414) | 49.879 (9.577) | 0.695 | 60.285 (10.069) | 54.645 (11.211) | 0.960 |

where \(t\) determines the range of \(p\)-values that are selected in the method. For instance, if \(t=0\), all \(p\)-values are selected, whereas if \(t=.05\) only statistically nonsignificant results are selected in the method. Note that each result’s contribution (between the brackets) is in the interval (0,1), as for the original Fisher method. The reversed Fisher method is similar (but not equivalent) to Carlisle’s method testing for excessive homogeneity across baseline measurements in RCTs (Carlisle 2017a; Carlisle 2012; Carlisle et al. 2015).

As an example, we apply the reversed Fisher method to both the genuine and fabricated results from Table 6.1. Using the threshold \(t=0.05\) to select only the nonsignificant results from Table 6.1, we retain \(k=10\) genuine \(p\)-values and \(k=10\) fabricated \(p\)-values. This results in \(\chi^2_{2\times10}=18.362,p=0.564\) for the genuine data (Set 1), and \(\chi^2_{2\times10}=66.848,p=6\times 10^{-7}\) for the fabricated data (Set 2). Another example, from the Fujii case (Carlisle 2012), also illustrates that the reversed Fisher method may detect fabricated data; the \(p\)-values related to fentanyl dose (as presented in Table 3 of Carlisle 2012) for five independent comparisons also show excessively high \(p\)-values, \(\chi^2_{2\times5}=19.335, p=0.036\). However, based on this anecdotal evidence little can be said about the sensitivity, specificity, and utility of the reversed Fisher method.

We note that incorrectly specified one-tailed tests can also result in excessive amounts of large \(p\)-values. For correctly specified one-tailed tests, the \(p\)-value distribution is right-skewed if the alternative hypothesis were true. When the alternative hypothesis is true, but the effect is in the opposite direction of the hypothesized effect (e.g., a negative effect when a one-tailed test for a positive effect is conducted), this results in a left-skewed \(p\)-value distribution. As such, any potential data fabrication detected with this method would need to be inspected for misspecified one-tailed hypotheses to preclude false conclusions. In the studies we present in this paper, misspecification of one-tailed hypothesis testing is not an issue because we prespecified the effect and its direction to the participants who were requested to fabricate data.

6.1.1.2 Variance analysis

In most empirical research papers, sample variance or standard deviation estimates are typically reported alongside means to indicate dispersion in the data. For example, if a sample has a reported age of \(M(SD)=21.05(2.11)\) we know this sample is both younger and more homogeneous than another sample with reported \(M(SD)=42.78(17.83)\).

Similar to the estimate of the mean in the data, there is sampling error in the estimated variance in the data (i.e., dispersion of the variance). The sampling error of the estimated variance is inversely related to the sample size. For example, under the assumption of normality the sampling error of a given standard deviation can be estimated as \(\sigma/\sqrt{2n}\) (p. 351, Yule 1922), where \(n\) is the sample size of the group. Additionally, if an observed random variable \(x\) is normally distributed, the standardized variance of \(x\) in sample \(j\) is \(\chi^2\)-distributed (p. 445; Hogg and Tanis 2001); that is \[\begin{equation} var(x)\sim\frac{\chi^2_{n_j-1}}{n_j-1} \tag{6.3} \end{equation}\] where \(n\) is the sample size of the \(j\)th group. Assuming equal variances of the \(J\) populations, this population variance is estimated by the Mean Squares within (\(MS_w\)) as \[\begin{equation} MS_w=\frac{\sum\limits^k_{j=1}(n_j-1)s^2_j}{\sum\limits^k_{j=1}(n_j-1)} \tag{6.4} \end{equation}\] where \(s^2_j\) is the sample variance and \(n_j\) the sample size in group \(j\). As such, under normality and equality of variances, the sampling distribution of standardized3 variances in group \(j\) (i.e., \(z^2_j\)) is \[\begin{equation} z^2_j\sim\left(\frac{\chi^2_{n_j-1}}{n_j-1}\right)/MS_w \tag{6.5} \end{equation}\]Using the theoretical sampling distribution of the standardized variances, we bootstrap the expected distribution of the dispersion of variances. In other words, we use the theoretical sampling distribution of the standard deviations to formulate a null model of the dispersion of variances that is in line with the probabilistic sampling processes for groups of equal population variances. First, we randomly draw standard deviations for all \(j\) groups according to Equation (6.3). Second, we calculate \(MS_w\) using those previously drawn values (Equation (6.4)). Third, we standardize the standard deviations using Equation (6.5). Fourth, we compute the measure of dispersion across the \(j\) groups as the standard deviation of the standardized variances (denoted \(SD_z\), Simonsohn 2013) or as the range of the standardized variances (denoted \(max_z-min_z\)). This process is repeated for \(i\) iterations to generate a parametric bootstrap distribution of the dispersion of variances according to the null model of equal variances across populations.

The observed dispersion of the variances, when compared to its expected distribution, allows a test for potential data fabrication. To this end we compute the proportion of iterations that show equally- or more extreme consistency in the dispersion of the variances to compute a bootstrapped \(p\)-value (e.g., \(P(X\leq SD_{obs})\)), with \(SD_{obs}\) the standard deviation of standardized variances and \(X\) the random variable corresponding to the standard deviation of standardized variances under the null model. In other words, we compute how many samples of \(j\) groups under the null show the observed consistency of the dispersion in the variances (or more consistent), to test whether the data are plausible given a genuine probabilistic sampling process (Simonsohn 2013). Similar to the Fisher method, this could be the result of the fabricator underappreciating the higher level sampling fluctuations, resulting in generating too little randomness (i.e., error) in the standard deviations across groups (Mosimann, Wiseman, and Edelman 1995).

As an example, we apply the variance analysis to the illustration from Table 6.1 and the Smeesters case (Simonsohn 2013). We apply the variance analysis across the standard deviations from each set in Table 6.1. For the genuinely probabilistic data (Set 1), we find that the reported mean standard deviation is 9.868 with a standard deviation equal to 0.595. For the fabricated data (Set 2), we find that the reported mean standard deviation is 10.667 with a standard deviation equal to 0.456. Such means illustrate the differences, but are insufficient to test them. Using the standard deviation of variances as the dispersion of variances measure, we can quantify how extreme this difference is using the previously outlined procedure. Results indicate that Set 1 has no excessive consistency in the dispersion of the standard deviations (\(p=0.214\)), whereas Set 2 does show excessive consistency in the dispersion of the standard deviations (\(p=0.006\)). In words, out of 100,000 randomly selected samples under the null model of independent groups with equal variances on a normally distributed measure, \(2.142\times 10^{4}\) showed less dispersion in standard deviations for Set 1, whereas only \(572\) showed less dispersion in standard deviations for Set 2. As a non-fictional example, three independent conditions from a study in the Smeesters case (\(n_j=15\)) were reported to have standard deviations 25.09, 24.58, and 25.65 (Simonsohn 2013). Here too, we can use the outlined procedure to see whether these reported standard deviations are too consistent according to sampling fluctuations of the second moment of the data according to theory. The standard deviation of these standard deviations is \(0.54\). Comparing this to 100,000 randomly selected replications under the theoretical null model, such consistency in standard deviations (or even more) would only be observed in 1.21% of those (Simonsohn 2013).

6.1.1.3 Extreme effect sizes

There is sufficient evidence that data fabrication can result in (too) large effects. For example, in the misconduct investigations in the Stapel case, large effect sizes were used as an indicator of data fabrication (Levelt 2012) with some papers showing incredibly large effect sizes that translate to explained variances of up to 95% or these effect sizes were larger than the product of the reliabilities of the related measures. Moreover, Akhtar-Danesh and Dehghan-Kooshkghazi (2003) asked faculty members from three universities to fabricate data sets and found that the fabricated data generally showed much larger effect sizes than the genuine data. From our own anecdotal experience, we have found that large effect sizes raised initial suspicions of data fabrication (e.g., \(d>20\)). In clinical trials, extreme effect sizes are also used to identify potentially fabricated data in multi-site trials while the study is still being conducted (Bailey 1991).

Effect sizes can be reported in research reports in various ways. For example, effect sizes in psychology papers are often reported as a standardized mean difference (e.g., \(d\)) or as an explained variance (e.g., \(R^2\)). A test statistic can be transformed into a measure of effect size. A test result such as \(t( 59)=3.55\) in a between-subjects design corresponds to \(d=0.924\) and \(r=0.176\) (Hartgerink, Wicherts, and Van Assen 2017). These effect sizes can readily be recomputed based on data extracted with statcheck across thousands of results (Nuijten, Hartgerink, et al. 2015; Hartgerink 2016b).

Observed effect sizes can subsequently be compared with the effect distribution of other studies investigating the same effect. For example, if a study on the ‘foot-in-the-door’ technique (Cialdini and Goldstein 2004) yields an effect size of \(r=.8\), we can collect other studies that investigate the ‘foot-in-the-door’ effect and compare how extreme that \(r=.8\) is in comparison to the other studies. If the largest observed effect size in the distribution is \(r=.2\) and a reasonable number of studies on the ‘foot-in-the-door’ effect have been conducted, an extremely large effect might be considered a flag for potential data fabrication. This method specifically looks at situations where fabricators would want to fabricate the existence of an effect (not the absence of one).

6.1.2 Detecting data fabrication in raw data

6.1.2.1 Digit analysis

The properties of leading (first) digits (e.g., the 1 in 123.45) or terminal (last) digits (e.g., the 5 in 123.45) may be examined in raw data. Here we focus on testing the distribution of leading digits based on the Newcomb-Benford Law (NBL) and testing the distribution of terminal digits based on the uniform distribution in order to detect potentially fabricated data.

For leading digits, the Newcomb-Benford Law or NBL (Newcomb 1881; Benford 1938) states that these digits do not have an equal probability of occuring under certain conditions, but rather a monotonically decreasing probability. A leading digit is the left-most digit of a numeric value, where a digit is any of the nine natural numbers (\(1,2,3,...,9\)). The distribution of the leading digit is, according to the NBL: \[\begin{equation} P(d)=log_{10}\frac{1+d}{d} \tag{6.6} \end{equation}\]where \(d\) is the natural number of the leading digit and \(P(d)\) is the probability of \(d\) occurring. Table 6.2 indicates the expected leading digit distribution based on the NBL. This expected distribution is typically compared to the observed distribution using a \(\chi^2\)-test (\(df=9-1\)). In order to make such a comparison feasible, it requires a minimum of 45 observations based on the rule of thumb outlined by Agresti (2003) (\(n=I\times J\times 5\), with \(I\) rows and \(J\) columns). The NBL has been applied to detect financial fraud (e.g., Cho and Gaines 2007), voting fraud (e.g., Durtschi, Hillison, and Pacini 2004), and also problems in scientific data (Hüllemann, Schüpfer, and Mauch 2017; Bauer and Gross 2011).

| Digit | Proportion |

|---|---|

| 1 | 0.301 |

| 2 | 0.176 |

| 3 | 0.125 |

| 4 | 0.097 |

| 5 | 0.079 |

| 6 | 0.067 |

| 7 | 0.058 |

| 8 | 0.051 |

| 9 | 0.046 |

However, the NBL only applies under specific conditions that are rarely fulfilled in the social sciences. Hence, its applicability for detecting data fabrication in science can be questioned. First, the NBL only applies for true ratio scale measures (Hill 1995; Berger and Hill 2011). Second, sufficient range on the measure is required for the NBL to apply (i.e., range from at least \(1-1000000\) or \(1-10^6\); Fewster 2009). Third, these measures should not be subject to digit preferences, for example due to psychological preferences for rounded numbers. Fourth, any form of truncation undermines the NBL (Nigrini 2015). Moreover, some research has even indicated that humans might be able to fabricate data that are in line with the NBL (Diekmann 2007; Burns 2009), immediately undermining the applicability of the NBL in context of detecting data fabrication.

For terminal digits, analysis is based on the principle that the rightmost digit is the most random digit of a number, hence, is expected to be uniformly distributed under specific conditions (Mosimann, Wiseman, and Edelman 1995; Mosimann and Ratnaparkhi 1996). Terminal digit analysis is also conducted using a \(\chi^2\)-test (\(df=10-1\)) on the digit occurrence counts (including zero), where the observed frequencies are compared with the expected uniform frequencies. The rule of thumb outlined by Agresti (2003) indicates at least 50 observations are required to provide a meaningful test of the terminal digit distribution (\(n=I\times J \times 5\), with \(I\) rows and \(J\) columns). Terminal digit analysis was developed during the Imanishi-Kari case by Mosimann and Ratnaparkhi (1996; for a history of this decade long case, see Kevles 2000).

Figure 6.1 depicts simulated digit counts for the first- through fifth digit of a random, standard normally distributed variable (i.e., \(N\sim(0,1)\)). The first- and second digit distributions are clearly non-uniform, whereas the third digit distribution seems only slightly non-uniform. As such, the rightmost digit can be expected to be uniformly distributed if sufficient precision is provided (Mosimann, Wiseman, and Edelman 1995). What sufficient precision is, depends on the process generating the data. In our example with \(N\sim(0,1)\), the distribution of the third and later digits seem well-approximated by the uniform distribution.

Figure 6.1: Frequency distributions of the first-, second-, and third digits. We sampled 100,000 values from a standard normal distribution to create these digit distributions.

6.1.2.2 Multivariate associations

Variables or measurements included in one study can have multivariate associations that might be non-obvious to researchers. Hence, such relations between variables or measurements might be overlooked by people who fabricate data. Fabricators might also simply be practically unable to fabricate data that reflect these multivariate associations, even if they are aware of these associations. For example, in response time latencies, there typically is a positive relation between mean response time and the variance of the response time. Given that the genuine multivariate relations between different variables arise from stochastic processes and are not readily known in either their form or size, these might be difficult to take into account for someone who wants to fabricate data. As such, using multivariate associations to discern fabricated data from genuine data might prove worthwhile.

The multivariate associations between different variables can be estimated from control data that are (arguably) genuine. For example, if the multivariate association between means (\(M\)s) and standard deviations (\(SD\)s) is of interest, control data for that same measure can be collected from the literature. With these control data, a meta-analysis provides an overall estimate of the multivariate relation that can subsequently be used to verify the credibility of a set of statistics.

Specifically, the multivariate associations from the genuine data are subsequently used to estimate the extremity of an observed multivariate relation in investigated data. Consider the following fictitious example, regarding the multivariate association between \(M\)s and \(SD\)s for a response latency task mentioned earlier. Figure 6.2 depicts a (simulated) population distribution of the association (e.g., a correlation) between \(M\)s and \(SD\)s from the literature (\(N\sim(.123, .1)\)). Assume we have two papers, each coming from a pool of direct replications providing an equal number of \(M\)s and corresponding \(SD\)s. Associations between these statistics are \(0.5\) for Paper 1 and \(0.2\) for Paper 2. From Figure 6.2 we see that the association in Paper 1 has a much higher percentile score in the distribution (i.e., \(99.995\)th percentile) than that of Paper 2 (i.e., \(78.447\)th percentile).

Figure 6.2: Distribution of 100 simulated observed associations between Ms and SDs for a response latency task; simulated under \(N(.123,.1)\). The red- and blue dots indicate observed multivariate associations from fictitious papers. Paper 1 may be considered relatively extreme and of interest for further inspection; Paper 2 may be considered relatively normal.

6.2 Study 1 - detecting fabricated summary statistics

We tested the performance of statistical methods to detect data fabrication in summary statistics with genuine and fabricated summary statistics with psychological data. We asked participants to fabricate data that were supposedly drawn from a study on the anchoring effect (Tversky and Kahneman 1974; Jacowitz and Kahneman 1995). The anchoring effect is a well-known psychological heuristic that uses the information in the question as the starting point for the answer, which is then adjusted to yield a final estimate of a quantity. For example:

Do you think the percentage of African countries in the UN is above or below [10% or 65%]? What do you think is the percentage of African countries in the UN?

In their classic study, Tversky and Kahneman (1974) varied the anchor in this question between 10% and 65% and found that they yielded mean responses of 25% and 45%, respectively (Tversky and Kahneman 1974). We chose the anchoring effect because it is well known and because a considerable amount of (arguably) genuine data sets on the anchoring heuristic are freely available (https://osf.io/pqf9r; R. A. Klein et al. 2014). This allowed us to compare data knowingly and openly fabricated by our participants (researchers in psychology) to actual data that can be assumed to be genuine because they were draw from a large-scale international project involving many contributing labs (a so-called Many Labs study). Our data fabrication study was approved by Tilburg University’s Ethics Review Board (EC-2015.50; https://osf.io/7tg8g/).

6.2.1 Methods

We collected genuine summary statistics from the Many Labs study and fabricated summary statistics from our participating fabricators for four anchoring studies: (i) distance from San Francisco to New York, (ii) human population of Chicago, (iii) height of the Mount Everest, and (iv) the number of babies born per day in the United States (Jacowitz and Kahneman 1995). Each of the four (genuine or fabricated) studies provided us with summary statistics in a 2 (low/high anchoring) \(\times\) 2 (male/female) factorial design. Our analysis of the data fabrication detection methods used the summary statistics (i.e., means, standard deviations, and test results) of the four anchoring studies fabricated by each participant or the four anchoring studies that had actually been conducted by each participating lab in the Many Labs project (R. A. Klein et al. 2014). The test results available are the main effect of the anchoring condition, the main effect of gender, and the interaction effect between the anchoring conditions and gender conditions. For current purposes, a participant is defined as researcher/lab where the four anchoring studies’ summary statistics originate from. All materials, data, and analyses scripts are freely available on the OSF (https://osf.io/b24pq) and a preregistration is available at https://osf.io/tshx8/. Throughout this report, we will indicate which facets were not preregistered or deviate from the preregistration (for example by denoting “(not preregistered)” or “(deviation from preregistration)”) and explain the reason of the deviation.

6.2.1.1 Data collection

We downloaded thirty-six genuine data sets from the publicly available Many Labs (ML) project (https://osf.io/pqf9r; R. A. Klein et al. 2014). The ML project replicated several effects across thirty-six locations, including the anchoring effect in the four studies mentioned previously. Considering the size of the ML project, the transparency of research results, and minimal individual gain for fabricating data, we felt confident to assume these data are genuine. For each of the thirty-six labs we computed three summary statistics (i.e., sample sizes, means, and standard deviations) for each of the four conditions in the four anchoring studies (i.e., \(3\times4\times4\); data: https://osf.io/5xgcp/). We computed these summary statistics from the raw ML data, which were cleaned using the original analysis scripts from the ML project.

The sampling frame for the participants asked to fabricate data consisted of 2,038 psychology researchers who published a peer-reviewed paper in 2015, as indexed in Web of Science (WoS) with the filter set to the U.S. We sampled psychology researchers to improve familiarity with the anchoring effect (Tversky and Kahneman 1974; Jacowitz and Kahneman 1995). We filtered for U.S. researchers to ensure familiarity with the imperial measurement system, which is the scale of some of the anchoring studies and in order to reduce heterogeneity across fabricators.4 We searched WoS on October 13, 2015. In total, 2,038 unique corresponding e-mails were extracted from 2,014 papers (due to multiple corresponding authors).

From these 2,038 psychology researchers, we e-mailed a random sample of 1,000 researchers to participate in our study (April 25, 2016; osf.io/s4w8r). We used Qualtrics and removed identifying information not essential to the study (e.g., no IP-addresses saved). We informed the participating researchers that the study would require them to fabricate data and explicitly mentioned that we would investigate these data with statistical methods to detect data fabrication. We also clarified to the participants that they could stop at any time without providing a reason. If they wanted, participants received a $30 Amazon gift card as compensation for their participation if they were willing to enter their email address. They could win an additional $50 Amazon gift card if they were one of three top fabricators (participants were not informed about how we planned to detect data fabrication; the procedure for this is explained in the Data Analysis section). We did not inform participants about how we planned to detect data fabrication. The provided e-mail addresses were unlinked from individual responses upon sending the bonus gift cards. The full Qualtrics survey is available at osf.io/rg3qc.

Each participant was instructed to fabricate 32 summary statistics (4 studies \(\times\) 2 anchoring conditions \(\times\) 2 sexes \(\times\) 2 statistics [mean and SD]) that corresponded to three hypotheses. We instructed participants to fabricate results for the following hypotheses: there is (i) a positive main effect of the anchoring condition, (ii) no effect of sex, and (iii) no interaction effect between condition and sex. We fixed the sample sizes in the fabricated anchoring studies to 25 per cell so that participants did not need to fabricate sample sizes. These fabricated summary statistics and their accompanying test results for these three hypotheses serve as the data to examine the properties of statistical tools to detect data fabrication.

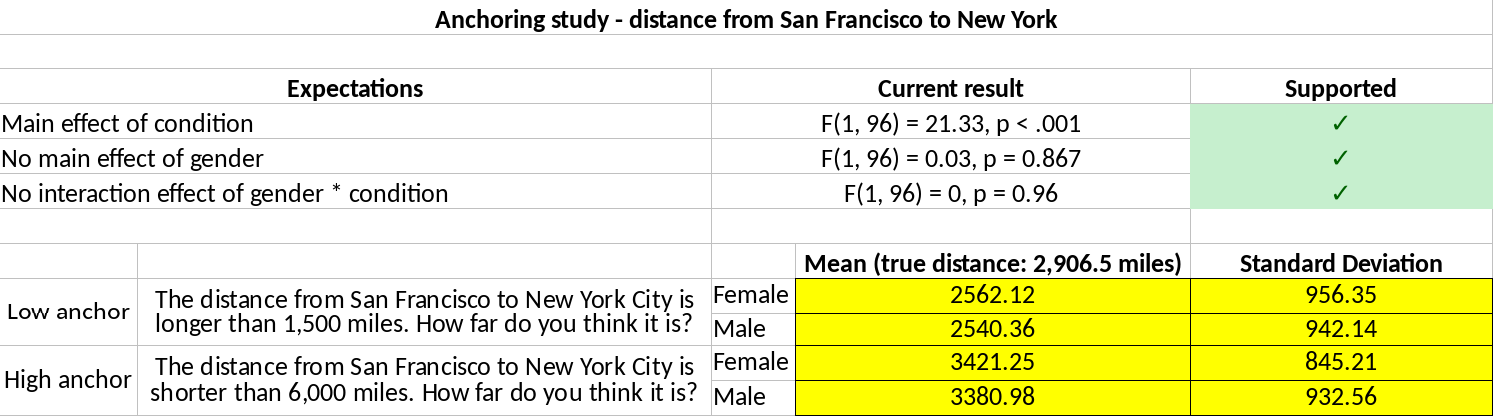

We provided participants with a template spreadsheet to fill out the fabricated data, in order to standardize the fabrication process without restraining the participant in how they chose to fabricate data. Figure 6.3 depicts an example of this spreadsheet (original: https://osf.io/w6v4u). We requested participants to fill out the yellow cells with fabricated data, which included means and standard deviations for the four conditions. Using these values, the spreadsheet automatically computed statistical tests and immediately showed them in the “Current result” column instantaneously. If these results supported the (fabrication) hypotheses, a checkmark appeared as depicted in Figure 6.3. We required participants to copy-paste the yellow cells into Qualtrics. This provided a standardized response format that could be automatically processed in the analyses. Technically, participants could provide a response that did not correspond to the instructions but none of them did.

Figure 6.3: Example of a filled out template spreadsheet used in the fabrication process of Study 1. Respondents fabricated data in the yellow cells, which were used to automatically compute the results of the hypothesis tests, shown in the column “Current result”. If the fabricated data confirm the hypotheses, a checkmark appeared in a green cell (one of four template spreadsheets available at https://osf.io/w6v4u).

Upon completion of the data fabrication, we debriefed respondents within Qualtrics (full survey: osf.io/rg3qc/). Respondents self-rated their statistical knowledge (1 = extremely poor, 10 = excellent), what statistical analysis programs they used frequently (i.e., at least once per week), whether they had ever conducted an anchoring study themselves, whether they used a random number generator to fabricate data in this study, whether they fabricated raw data to get summary statistics, how many combinations of means and standard deviations they created for each study (on average), and a free-text description of their fabrication procedures per study. Lastly we reminded participants that data fabrication is widely condemned by professional organizations, institutions, and funding agencies alike. This reminder was intended to minimize potential carry-over effects of the unethical behavior into actual research practice (Mazar, Amir, and Ariely 2008). Using quotum sampling, we collected as many responses as possible for the available 36 rewards, resulting in 39 fabricated data sets (https://osf.io/e6zys; 3 participants did not participate for a bonus).

6.2.1.2 Data analysis

We analyzed the genuine and fabricated data sets (36 and 39, respectively), with each data set consisting of summary statistics of four anchoring studies. The data set is the unit of analysis. Four types of analyses are conducted on each of the 75 data sets; (i) the reversed Fisher method, (ii) variance analyses, (iii) the Fisher method applied to the results of the former two, and (iv) analysis of the effect sizes of the statistically significant anchoring effect of the four anchoring studies. Per type of analysis, we examine if we can distinguish the 36 genuine from the 39 fabricated data sets, mainly using Area Under Receiving Operator Characteristic (AUROC) curves. Below we first describe each of the four types of analyses, followed by a description of the AUROC curve analysis.

We conducted two analyses to detect data fabrication using the reversed Fisher method. More specifically, we conducted one reversed Fisher method analysis for the four statistically nonsignificant results of the gender effect (one per anchoring study) and one for the four statistically nonsignificant interaction effects (one per anchoring study). This results in two reversed Fisher method results (based on \(k\)=4) per data set.

For the variance analyses, we substantially deviated from the preregistration (https://osf.io/tshx8/) and added multiple analyses. We analyzed the 16 sample variances (four anchoring studies \(\times\) four conditions per anchoring study) per lab or participant in fourteen different ways. Each of the fourteen variance analyses was conducted using two dispersion of variance measures. One measure inspects the standard deviation of the sample variances (i.e., \(SD_z\)); one measure inspects the range of the sample variances (i.e., \(max_z-min_z\)); we ran all 28 analyses with 100,000 iterations from which we computed the bootstrapped \(p\)-value (see also the Theoretical Framework). Of these 28 variance analyses (14 for each dispersion of variances measure), only one was preregistered. This was the variance analysis combining all 16 sample variances of the four anchoring studies. Upon analyzing the results of this preregistered variance analysis, however, we realized that the variance analyses assume that the included variances are from the same population distribution. Assuming homogeneous populations of variances is unrealistic for the four very different anchoring conditions or studies (i.e., they have outcome measures on very different scales, such as distances in miles and babies born). Hence, we included variance analyses based on subgroups, where we analyzed each anchoring study separately (four variance analyses) or analyzed each anchoring condition of each study separately (i.e., the low/high anchoring condition collapsed across gender; eight variance analyses). We also conducted one variance analysis that combined all variances across studies but takes into account the subgroups per anchoring condition per study.

We also combined the reversed Fisher method results with the results from the variance analyses using the original Fisher method. More specifically, we combined the results from the two reversed Fisher method analyses (one analysis for the four gender effects and one analysis for the four interaction effects) with the preregistered variance analysis (the result of this analysis was used to determine the three most difficult to detect fabricated datasets and subsequently to reward the ‘best fabricators’). We additionally applied the Fisher method to results of the reversed Fisher method (two results) with three different combinations of results of the variance analyses; based on variance analyses per anchoring study (four results), per anchoring study \(\times\) condition combination (eight results), and across all studies and conditions but taking into account heterogeneous variances per anchoring condition for each study (one result). Hence, the additional Fisher method analyses were based on six, ten, and three results, respectively. Throughout these combinations, we only use the \(SD_z\) dispersion of variance measure for parsimony. Note that the performance of the Fisher method combining results of various analyses (the reversed Fisher method and the variance analyses) as we do here is naturally dependent on the performance of the individual results included in the combination; if all included results perform well the Fisher method is bound to perform well and vice versa.

Finally, we looked at statistically significant effect sizes. We expected fabricated statistically significant effects to be larger than genuine statistically significant effects. As such, we compared the 75 statistically significant anchoring effects for each of the four anchoring studies separately (not preregistered).

For each of the previously described statistical methods to detect data fabrication, we carried out AUROC curve analyses. AUROC analyses summarize the sensitivity (i.e., True Positive Rate [TPR]) and specificity (i.e., True Negative Rate [TNR]) for various decision criteria (e.g., \(\alpha=0, .01, .02, ..., .99, 1\)). For our purposes, AUROC values indicate the probability that a randomly drawn fabricated and genuine dataset can be correctly classified as fabricated or genuine based on the result of the analysis (Hanley and McNeil 1982). In other words, if \(AUROC=.5\), correctly classifying a randomly drawn dataset as fabricated (or genuine) is equal to 50% (assuming equal prevalences). For this setting, we follow the guidelines of Youngstrom (2013) and regard any AUROC value \(<.7\) as poor for detecting data fabrication, \(.7\leq\) AUROC \(<.8\) as fair, \(.8\leq\) AUROC \(<.9\) as good, and AUROC \(\geq.9\) as excellent. We conducted all analyses using the pROC package (Robin et al. 2011).

6.2.2 Results

Figure 6.4 shows a group-level comparison of the genuine- (\(k=36\)) and fabricated (\(k=39\)) datasets, which contain four \(p\)-values and relevant effect sizes (\(r\)) for each type of effect (gender, anchoring, interaction) per dataset (i.e., \(75\times4\) data points for each plot). These group-level comparisons provide a general overview of the differences between the genuine and fabricated data. Figure 6.4 (right and left column) already indicates that there are few systematic differences between fabricated and genuine summary statistics from the anchoring studies when statistically nonsignificant effects are inspected (i.e., gender and interaction hypotheses). However, there seem to be larger differences when we required participants to fabricate statistically significant summary statistics (i.e., anchoring hypothesis; middle column). We discuss results bearing on the specific tests for data fabrication next.

Figure 6.4: Density distributions of genuine and fabricated summary statistics across four anchoring studies, per effect (gender, anchoring, or interaction across columns) and type of result (p-value or effect size across rows).

6.2.2.1 \(P\)-value analysis

When we applied the reversed Fisher method to the statistically nonsignificant effects, results indicated its performance is approximately equal to chance classification. We found \(AUROC=0.501\), 95% CI [\(0.468\)-\(0.535\)] for statistically nonsignificant gender effects and \(AUROC=0.516\), 95% CI [\(0.483\)-\(0.549\)] for statistically nonsignificant interaction effects. For the gender effects, we classified 12 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 6 of the 36 genuine summary statistics as fabricated (results per respondent available at osf.io/a6jb4). For the interaction effects, we classified 11 of the 39 fabricated summary statistics (\(\alpha=.01\)) and 8 of the 36 genuine summary statistics as fabricated (results per respondent available at osf.io/jz57p). In other words, results from this sample indicated that detection of fabricated data using the distribution of statistically nonsignificant \(p\)-values to detect excessive amounts of high \(p\)-values does not seem promising.

6.2.2.2 Variance analysis

We expected the dispersion of variances to be lower in fabricated data as opposed to genuine data. We computed the AUROC values for the variance analyses with the directional hypothesis that genuine data shows more variation than fabricated data, using either the dispersion of variance as captured by the standard deviation of the variances (i.e., \(SD_z\)) or the range of the variances (i.e., \(max_z-min_z\)). AUROC results of all 14 analyses (as described in the Data analysis section) are presented in Table 6.3, one result for each dispersion of variance measure. Of these 14 results, we only preregistered the variance analysis inspecting the standardized variances across all studies under both the \(SD_z\) and \(max_z-min_z\) operationalizations, assuming unrealistically homogeneous population variances (https://osf.io/tshx8/; second row of Table 6.3). As we did not preregister the other variance analyses, these should be considered exploratory.

| Population variance assumption | Study | \(SD_z\) | \(max_{z}-min_{z}\) |

|---|---|---|---|

| Heterogeneity | Overall | 0.761 [0.733-0.788] | 0.827 [0.8-0.853] |

| Homogeneity | Overall | 0.264 [0.235-0.293] | 0.544 [0.507-0.58] |

| Homogeneity | Study 1 | 0.373 [0.339-0.406] | 0.488 [0.474-0.502] |

| Homogeneity | Study 2 | 0.395 [0.36-0.429] | 0.634 [0.608-0.66] |

| Homogeneity | Study 3 | 0.498 [0.463-0.533] | 0.563 [0.539-0.588] |

| Homogeneity | Study 4 | 0.401 [0.367-0.435] | 0.561 [0.527-0.594] |

| Heterogeneity | Study 1, low anchoring | 0.438 [0.406-0.47] | 0.487 [0.481-0.493] |

| Heterogeneity | Study 1, high anchoring | 0.615 [0.582-0.647] | 0.501 [0.492-0.51] |

| Heterogeneity | Study 2, low anchoring | 0.652 [0.621-0.683] | 0.625 [0.607-0.643] |

| Heterogeneity | Study 2, high anchoring | 0.556 [0.523-0.589] | 0.528 [0.515-0.541] |

| Heterogeneity | Study 3, low anchoring | 0.643 [0.612-0.674] | 0.542 [0.53-0.553] |

| Heterogeneity | Study 3, high anchoring | 0.747 [0.719-0.775] | 0.691 [0.669-0.712] |

| Heterogeneity | Study 4, low anchoring | 0.667 [0.636-0.697] | 0.595 [0.577-0.614] |

| Heterogeneity | Study 4, high anchoring | 0.798 [0.773-0.823] | 0.756 [0.733-0.779] |

Under the (in hindsight unrealistic) assumption of homogeneous population variances, our preregistered variance analyses did not perform above chance level. Using the standard deviation of the variances (i.e., \(SD_z\)) as dispersion of variance measure, the results are: \(AUROC=0.264\), 95% CI [\(0.235\)-\(0.293\)]. With this statistic, we classified 0 of the 39 fabricated summary statistics (\(\alpha=.01\)) and 0 of the 36 genuine summary statistics as fabricated (results per respondent available at osf.io/9cjdh). Using the range of the variances (i.e., \(max_z-min_z\)) as dispersion of variance, the results are: \(AUROC=0.544\), 95% CI [\(0.507\)-\(0.58\)].

With this statistic, we detected 39 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 36 of the 36 genuine summary statistics as fabricated (results per respondent available at osf.io/2ts6b). Comparing the results between \(SD_z\) and \(max_z-min_z\) indicates that the range of the variances measure seems more robust to the violations of the assumption of homogeneous variances than the standard deviation of the variances measure. Overall these results indicate that a violation of the homogeneity assumption may undermine analyses on heterogeneous variances. These assumptions should be made more explicit and checked whenever possible, to prevent improper use.

We conducted exploratory analyses that take into account the heterogeneity of variances across conditions and studies, which sometimes also resulted in improved performance to detect data fabrication. Analyses separated per study or anchoring condition show variable \(AUROC\) results (ranging from 0.373-0.798; rows 3-14 in Table 6.3). Using the standard deviation of variances (i.e., \(SD_z\); row 1 in Table 6.3) in a heterogeneous manner across the conditions and studies, \(AUROC=0.761\), 95% CI [\(0.733\)-\(0.788\)]. With this statistic, we classified 9 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 0 of the 36 genuine summary statistics (results per respondent available at osf.io/srpg9). Using the range of variances (i.e., \(max_z-min_z\)) in a heterogeneous manner across the conditions and studies, \(AUROC=0.827\), 95% CI [\(0.8\)-\(0.853\)]. With this statistic, we classified the same 9 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 0 of the 36 genuine summary statistics (results per respondent available at osf.io/93rek).

6.2.2.3 Combining \(p\)-value and variance analyses

Our preregistered analysis combined the homogeneous variance analysis across studies and conditions with the \(p\)-value analyses of the gender and interaction effects. This combined analysis yielded \(AUROC=0.58\), 95% CI [0.548-0.611]. With this statistic, we classified 19 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 16 of the 36 genuine summary statistics (results per respondent available at osf.io/hq29t). Given that the combination method would be expected to perform not much better than its constituent results it logically follows that the combination of \(p\)-values and variance analyses performs this poorly.

The poor performance is in part is due to the unrealistic assumption of homogeneous variances in the variance analysis; we explored the efficacy of other combinations that loosen this assumption. First, we split the variance analyses per study and included four variance analysis results instead of one when we analyzed them overall; \(AUROC=0.605\), 95% CI [0.573-0.636]. With this statistic, we classified 20 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 13 of the 36 genuine summary statistics (results per respondent available at osf.io/r8pf5). Second, we split the variance analyses further, splitting across conditions within studies. This adds another four variance analyses (a total of eight); \(AUROC=0.684\), 95% CI [0.655-0.714]. With this statistic, we classified 25 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 15 of the 36 genuine summary statistics (results per respondent available at osf.io/sv35k). Finally, we replaced the original homogeneous variance analysis (row 2 in Table 6.3) with the overall and heterogeneous variance analysis (row 1 in Table 6.3); \(AUROC=0.647\), 95% CI [0.616-0.677]. With this statistic, we classified 23 of the 39 fabricated summary statistics as fabricated (\(\alpha=.01\)) and 16 of the 36 genuine summary statistics (results per respondent available at osf.io/zt3nk). As the \(AUROC\)s of the combination method did not exceed that of the variance analyses alone, we conclude that the combination method failed to outperform the variance analyses.

6.2.2.4 Extreme effect sizes

Using the statistically significant effect sizes from the anchoring studies, we differentiated between the fabricated and genuine results fairly well. Figure 6.4 (middle column, second row) indicates that the fabricated statistically significant effects were considerably different from the genuine ones. When we inspected the effect size distributions (\(r\)), we saw that the median fabricated effect size across the four studies was \(0.891\) whereas the median genuine effect size was considerably smaller (\(0.661\); median difference across the four anchoring effects \(0.23\)). In contrast to the fabricated nonsignificant effects, which resembled the genuine data quite well, the statistically significant effects seem to have been harder to fabricate for the participants. More specifically, the \(AUROC\) for the studies approximate .75 each (\(0.743\), 95% CI [\(0.712\)-\(0.774\)]; \(0.734\), 95% CI [\(0.702\)-\(0.767\)]; \(0.737\), 95% CI [\(0.706\)-\(0.768\)]; \(0.755\), 95% CI [\(0.724\)-\(0.786\)]; respectively). Figure 6.5 depicts the density distributions of the genuine and fabricated effect sizes per anchoring study, which shows the extent to which the density of the fabricated effect sizes exceeds the maximum of the genuine effect sizes. For instance, the percentage of fabricated statistically significant anchoring effect sizes that is larger than all 36 genuine statistically significant anchoring effect sizes is 59% in Study 1, 64.1% in Study 2, 53.8% in Study 3, and 66.7% in Study 4. Based on these results, it seems that using extreme effect sizes to detect potential data fabrication may be is a parsimonious and fairly effective method.

Figure 6.5: Density distributions of genuine and fabricated anchoring effect sizes for each of the four anchoring studies.

6.2.2.5 Fabricating effects with Random Number Generators (RNGs)

Fabricated effects might seem more genuine when participants used Random Number Generators (RNGs). RNGs are typically used in computer-based simulation procedures where data are generated that are supposed to arise from probabilistic processes. Given that our framework of detecting data fabrication rests on the lack of intuitive understanding of humans at drawing values from probability distributions, those participants who used an RNG might come closer to fabricating seemingly genuine data, leading to more difficult to detect fabricated data. The analyses presented next were not preregistered.

We split our analyses for those 11 participants who indicated using RNGs and the remaining 28 participants who indicated not to have used RNGs. Figure 6.6 shows the same density distributions as in Figure 6.4, except that this time the density distributions of the fabricated data are split between these two groups.

Figure 6.6: Density distributions of p-values and effect sizes for the gender effect, the anchoring effect, and the interaction effect across the four anchoring studies. This figure is similar to Figure 6.4, except that each panel now separates the density distributions for fabricated results using a random number generator (RNG), fabricated results without using a RNG, and genuine effects. Respondents self-selected to use (or not use) RNGs in their fabrication process.

Figure 6.6 suggests that using RNGs may have resulted in less exaggerated anchoring effect sizes, but still larger than genuine ones. Furthermore, it seems that the use of RNGs produced somewhat more uniformly distributed statistically nonsignficant \(p\)-values than those without RNGs. For effect sizes, Table 6.4 specifies the differences in sample estimates of the \(AUROC\) between the groups of fabricated results with and without RNGs (as compared to the genuine data). These results indicate that the fabricated effect sizes from participants who used RNGs are relatively more difficult to detect compared to data from participants who did not use a RNG (illustratively, the simple mean of the left column of Table 6.4 is 0.604 compared to the right column simple mean of 0.797). The numbers presented inTable 6.4 can be interpreted as the probability that the larger effect is fabricated, when presented with one genuine and fabricated effect size. For nonsignificant \(p\)-values, we obtained the following \(AUROC\) values; gender, with RNG \(AUROC=0.455\) 95% CI [\(0.405\)-\(0.504\)], without RNG \(AUROC=0.52\) 95% CI [\(0.482\)-\(0.557\)]; interaction, with RNG \(AUROC=0.601\) 95% CI [\(0.558\)-\(0.644\)], without RNG \(AUROC=0.482\) 95% CI [\(0.444\)-\(0.52\)]). For the best performing variance analysis (i.e., heterogeneity over all four anchoring studies with \(max_z-min_z\)) classification performance does not seem to be systematically different between those data fabricated with (\(AUROC=0.78\) 95% CI [\(0.728\)-\(0.833\)]) or without RNGs (\(AUROC=0.845\) 95% CI [\(0.817\)-\(0.874\)]).

| Study | \(AUROC\) RNG, \(k=11\) | \(AUROC\) no RNG, \(k=28\) |

|---|---|---|

| Study 1 | 0.553 [0.489-0.617] | 0.817 [0.785-0.85] |

| Study 2 | 0.641 [0.578-0.705] | 0.771 [0.734-0.807] |

| Study 3 | 0.578 [0.512-0.645] | 0.8 [0.767-0.832] |

| Study 4 | 0.641 [0.581-0.702] | 0.8 [0.764-0.835] |

Note that participants self-selected the use of RNGs or not, and that we did not preregister these analyses. Given the small number of results (11 versus 28), we did not statistically test the differences due to lack of statistical power, and only present descriptive results.

6.2.3 Discussion

We presented the first controlled study on detecting data fabrication at the level summary statistics. As far as we could tell, previous efforts only looked at group-level comparisons of genuine and fabricated data (Akhtar-Danesh and Dehghan-Kooshkghazi 2003), inspected properties of individually fabricated sets of data without comparing them to genuine data, or did not contextualize these data in a realistic study with specific hypotheses (Mosimann, Wiseman, and Edelman 1995). We explicitly asked researchers to fabricate results for an effect within their research domain (i.e., the anchoring effect), which was contextualized in realistic hypotheses, and compared them to genuine data on the same effect. We investigated the performance of the reversed Fisher method, variance analyses, combinations of these two methods, and statistically significant effect sizes to detect fabricated data.

Methods related to classifying statistically significant summary statistics (i.e., effect sizes and variance analyses) performed fairly well, whereas those relating to statistically nonsignificant summary statistics (i.e., \(p\)-value analyses) performed poorly. Non-preregistered results suggest that variance analyses performed similarly or marginally better than using statistically significant effect sizes in this sample. Hence, we recommend using methods that investigate statistically significant effects to detect potential data fabrication, but prior to their application their assumptions should be well understood and tested.

We noted that the assumption of homogeneous population variances in the variance analyses has not previously been explicated nor tested for robustness to violations. In Simonsohn (2013) it remains implicit that the variances grouped together in an analysis should arise from a homogeneous population distribution. Our results indicated that the classification performance of variance analyses may strongly depend on satisfying this assumption, that is, the performance of the method is not robust to violations of the homogeneity assumption. The alternative approach to variance analyses using the range of variances instead of their standard deviation (i.e., \(max_{z}-min_{z}\) rather than \(SD_z\)) seemed to be more robust to violations of the homogeneity assumption. This comparison was not preregistered and its performance could be studied further. Nonetheless, based on the success of using the dispersion of variances, we recommend to use variance analyses with subgrouping of variances into those that are likely to be from the same population distribution (e.g., based on anchoring condition in the datasets studied here) and also consider using the range of standard deviations \(max_{z}-min_{z}\)).

Of all methods we applied, we obtained the best performance using the heterogeneous variance analyses, which resulted in detecting 9 out of 39 fabricated data sets (23%) and no false positives (0; \(\alpha=.01\)). Performance using (only) the statistically effect sizes was comparably good. Consequently, we failed to detect the majority of the fabricated datasets using statistical methods based on nonsignificant \(p\)-values, consistency of variances, and effect sizes. More worrisome is that for many methods the false positive rate was high, in one case even 100% (using \(max_{z}-min_{z}\) based on the assumption of homogeneity of all variances).

Our finding that statistical analyses of data with fabrication detection tools may not be robust to violations of their assumptions has implications for investigations of research misconduct. Our results demonstrate that improper model specification can result in classifying anything as potentially fabricated (i.e., high false positive rate), which comes at high costs for all parties involved. Moreover, improper model specification may also result in a high false negative rate, as in our homogeneous variance analyses, resulting in a much too low \(AUROC\) values (e.g., \(AUROC=.264\)). Our sometimes high false positive and false negative rates are especially worrisome in light of widespread application of statistical methods to screen for potential problematic studies (e.g., Carlisle 2017a; Loadsman and McCulloch 2017), when their validation is based on the criterion that the methods proved useful to detect problematic data in isolated research misconduct cases the past (e.g., Carlisle 2012; Miller 2015; Carlisle and Loadsman 2016). For instance, the usefulness of the reversed Fisher method to detect problematic data in the past (Anonymous 2012; Levelt 2012) should not be taken as evidence of its validity for general application. Our study highlights the importance of validating methods with genuine reference data, before using these tools to flag potential problematic papers. Note that concerns like this have been expressed before (Kharasch and Houle 2017a; Mascha, Vetter, and Pittet 2017; Piraino 2017; Kharasch and Houle 2017b; Moppett 2017).

Our results warrant further research on the underlying assumptions and validity of statistical approaches to detect potential data fabrication using summary statistics. This further research can help determine or prevent model misspecification, both in the assumptions of the statistical models and the psychology theory for specific ways of fabricating data before standard application of these methods in practice (see also Carlisle 2017b).

For the reversed Fisher method that focused on the overly consistent results for effects that are expected to follow the null hypothesis, results indicated that participants did not fabricate excessive amounts of high \(p\)-values (i.e., closer to 1 than expected by chance) when told to fabricate statistically nonsignificant effects. This ran against our prediction that the absence of a true effect would prompt fabricators to fabricate results that do not contain enough randomness, resulting in too many high \(p\)-values. This is particularly noteworthy because this tenet has been helpful or even central to several known cases of research misconduct (Anonymous 2012; Levelt 2012). However, different from these specific cases, the results we asked participants to fabricate were first-order results (i.e., those immediately observable to the participants), whereas in the Stapel and Förster case, the reversed Fisher method showed potential data fabrication across second order results (i.e., similarity of means of experiments of different papers in the case of Stapel, or linearity test of first-order results in case of Förster). Hence, although our results indicate that the reversed Fisher method often does not perform well for inspecting first-order results, it may still perform well in isolated cases, particularly when applied to higher order results (see also Haldane 1948).

Results of our reversed Fisher method are inexact because we used dependent fabricated results, which we did not take into account in our analyses. More specifically, for the \(p\)-value analyses we analyzed the four \(p\)-values from (for example) the gender effect across the four fabricated studies for one participant. This might have violated the assumption of independence, hence may have resulted in biased results of this test. Neither our analyses of the effect sizes nor our variance analyses suffer from this issue.

Analyses combining different data fabrication tools may not perform better than analyses based on a single tool, which also has implications for research misconduct investigations. First, a fabricated dataset does not imply that all tools should hint at data fabrication; fabricated data may resemble genuine data in some respects but not in others. Second, focusing on one aspect that best distinguishes fabricated from genuine data may perform best. The problem is then to identify that aspect, preferably before conducting the investigation. Our study suggests to focus on the analysis of properties of statistically significant effect sizes, whereas some fraud cases suggested to focus on properties of statistically nonsignificant effect sizes. We recommend, in cases of multiple independent possibly fabricated studies, to use several tools to identify possible fabrication in one study, and then apply and test the tools that worked to the other possibly fabricated studies (cross-validation). Importantly, we wish to emphasize that it does not make sense to require that all tools signal fabrication; as fabricated data may resemble genuine data in some respects, absence of one or several signals should not be considered as evidence of no fabrication.

We also considered the possibility that the use of a Random Number Generator (RNG) to fabricate summary statistics could decrease the probability of detecting a fabricated dataset. Although we did not preregister these analyses, descriptive results suggest that using RNGs decreases the performance of using effect sizes to classify fabricated from genuine data. On the other hand, using RNGs did not substantially decrease the performance of the variance analysis that analyzed the effect sizes bearing on anchoring. Note that our results are solely descriptive due to too small group sizes for meaningful comparisons. We will investigate in Study 2 whether using RNGs affects the performance of detecting data fabrication in a similar fashion and revisit this issue in the general discussion.

We note that our presented results might be particular to the anchoring effect and not replicable with other effects. First, as opposed to many other effects in psychology, many data on the anchoring effect are already available and fabricators may have used these data when fabricating theirs. Second, fabrication strategies may be dependent on the type of effect or measurement that is being fabricated. In the anchoring studies, data needed to be fabricated for numbers that are in the hundreds or thousands. Such relatively large values might feel more unintuitive to think about than smaller numbers in the singles or tens that might appear in other research contexts. Hence, we might be better at detecting potential data fabrication in data of our study compared to most other studies because of this increased lack of intuitiveness. Other kinds of studies that are easier for fabricators to think about in terms of fabricating realistic data might prove more difficult to classify. For example, fabrication of data of Likert scales may be more difficult (or easier) to detect than fabrication of continuous data.

Despite testing various statistical methods to detect data fabrication, we did not test all available statistical methods to detect data fabrication in summary statistics. SPRITE (Heathers et al. 2018), GRIM (Brown and Heathers 2016), and GRIMMER (Anaya 2016) are some examples of other statistical methods that test for problematic or fabricated summary statistics (see also Buyse et al. 1999). However, these methods were not applicable in the studies we presented, because they require ordinal scale measures. It seems that, combined with the question of whether current results of detecting fabricated data replicate in Likert scale studies, validating these other methods would be a fruitful avenue for further research.

6.3 Study 2 - detecting fabricated individual level data

In Study 2 we tested the performance of statistical methods to detect fabrication of individual level (or raw) data. Our procedure is comparable to that used in Study 1: We again asked actual researchers to fabricate data that they thought would go undetected. However, instead of summary statistics, in Study 2 we asked participants to fabricate lower level data (i.e., individual level data) and included a face-to-face interview in which we debriefed participants on how they fabricated their data (Hartgerink et al. 2017). A preregistration of this study occurred during the seeking of funding (Hartgerink, Wicherts, and Assen 2016) and during data collection (https://osf.io/fc35g). Just like Study 1, this study was approved by the Tilburg Ethics Review Board (EC-2015.50; https://osf.io/7tg8g/).

To test the validity of statistical methods to detect data fabrication in individual level data, we investigated individual level data of the classic Stroop experiment (Stroop 1935). In a Stroop experiment, participants were asked to determine the color a word is presented in (i.e., word colors) and where the word also reads a color (i.e., color words). The presented word color (i.e., ‘red’, ‘blue’, or ‘green’) can be either presented in the congruent color (e.g., ‘red’ presented in red) or an incongruent color (e.g., ‘red’ presented in green). The dependent variable in a Stroop experiment is the response latency, typically in milliseconds. Participants in actual Stroop studies are usually presented with a set of these Stroop tasks, where the mean and standard deviation per condition serve as the individual level data for analyses (see also Ebersole et al. 2016). The Stroop effect is often computed as the difference in mean response latencies between the congruent and incongruent conditions.

6.3.1 Methods

6.3.1.1 Data collection

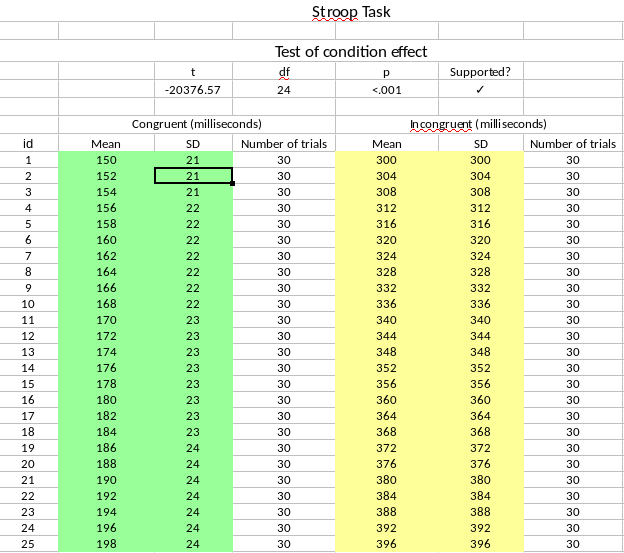

We collected twenty-one genuine data sets on the Stroop task from the Many Labs 3 project (https://osf.io/n8xa7/; Ebersole et al. 2016). Many Labs 3 (ML3) includes 20 participant pools from universities and one online sample (the original preregistration mentioned 20 data sets, accidentally overlooking the online sample; Hartgerink, Wicherts, and Assen 2016). Similar to Study 1, we assumed these data to be genuine due to the minimal individual gains for fabricating data and the transparency of the project. Using the original raw data and analysis script from ML3 (https://osf.io/qs8tp/), we computed the mean (\(M\)) and standard deviation (\(SD\)) of response latencies for each participant in both within-subjects conditions of congruent trials and incongruent trials (i.e., two \(M\)-\(SD\) combinations for each participant). This format was also the basis for the template spreadsheet that we requested participants to use to supply the fabricated data (see also Figure 6.7 or https://osf.io/2qrbs/). We calculated the Stroop effect as a \(t\)-test of the difference between the congruent and incongruent conditions (\(H_0:\mu_{\bar{X}_1-\bar{X}_2}=0\)).

Figure 6.7: Example of a filled out template spreadsheet used in the fabrication process for Study 2. Respondents fabricated data in the yellow cells and green cells, which were used to compute the results of the hypothesis test of the condition effect. If the fabricated data confirmed the hypotheses, a checkmark appeared (upper right). This template is available at https://osf.io/2qrbs.

We collected 28 fabricated data sets on the Stroop task in a two-stage sampling procedure. First, we invited 80 Dutch and Flemish psychology researchers who published a peer-reviewed paper on the Stroop task between 2005-2015 as available in the Thomson Reuters’ Web of Science database. We selected Dutch and Flemish researchers to allow for face-to-face interviews on how the data were fabricated. We chose the period 2005-2015 to prevent a decrease in the probability that the corresponding author would still be reachable via the given corresponding e-mail address. The database was searched on October 10, 2016 and 80 unique e-mails were retrieved from 90 publications. Two of these 80 researchers (2.5%) we contacted actually ended up participating in our study. Subsequently, we implemented a second, unplanned sampling stage where we collected e-mails from all PhD-candidates, teachers, and professors of psychology-related departments at Dutch universities. This resulted in 1,659 additional unique e-mails that we subsequently invited to participate in this study. Due to a malfunction in Qualtrics’ quotum sampling, we oversampled, resulting in 28 participants instead of the originally intended 20 participants. The second sampling scheme was not part of the original ethics application, but was considered crucial to obtain a sufficiently large sample.

Each participant received instructions on the data fabrication task via Qualtrics and was allowed to fabricate data until the face-to-face interview took place. In other words, each participant could take the time they wanted or needed to fabricate the data as extensively as they liked. Each participant received downloadable instructions (original: https://osf.io/7qhy8/) and the template spreadsheet via Qualtrics (see Figure 6.7; https://osf.io/2qrbs/). The interview was scheduled via Qualtrics with JGV, who blinded the rest of the research team from the identifying information of each participant and the date of the interview. All interviews took place between January 31 and March 3, 2017. To incentivize researchers to participate, they received 100 euros for participation; to incentivize them to fabricate (supposedly) hard to detect data they could win an additional 100 euros if they belonged to one out of three top fabricators (see Data Analysis section for exact method used). Participants were not informed about how we planned to detect data fabrication; we used the combined Fisher method (described next). JGV transcribed the contents of the interview and CHJH blind-reviewed these transcripts to remove any potentially personally identifiable information (these transcripts are freely available for anyone to use at https://doi.org/10.5281/zenodo.832490).

6.3.1.2 Data analysis

To detect data fabrication in individual level data using statistical tools, we performed a total of sixteen analyses per dataset (preregistration: https://osf.io/ecxvn/) for each of the 21 genuine datasets and 28 fabricated datasets. These sixteen analyses consisted of four Newcomb-Benford Law (NBL) digit analyses, four terminal digit analyses, two variance analyses, four multivariate association analyses (deviated from preregistration in that we used a parametric approach instead of the planned non-parametric approach), a combination test of these methods, and effect sizes at the summary statistics level (the latter test replicated Study 1 and was not preregistered). We had one dataset for each participant fabricating data and for each lab in the Many Labs study, amounting to 49 datasets.

For the digit analyses (NBL and terminal), we separated the 25 \(M\)s and 25 \(SD\)s per within-subjects condition and conducted \(\chi^2\)-tests for each per data set. As such, for one data set, we conducted digit analyses on the digits of (i) the mean response latencies in the congruent condition, (ii) the mean response latencies in the incongruent condition, (iii) the standard deviation of the response latencies in the congruent condition, and (iv) the standard deviation of the response latencies in the incongruent condition. For the NBL, we used the first (or leading) digit, whereas for the terminal digit analyses we tested the same sets but on the final digit.